UAV LIDAR mapping for crop fields

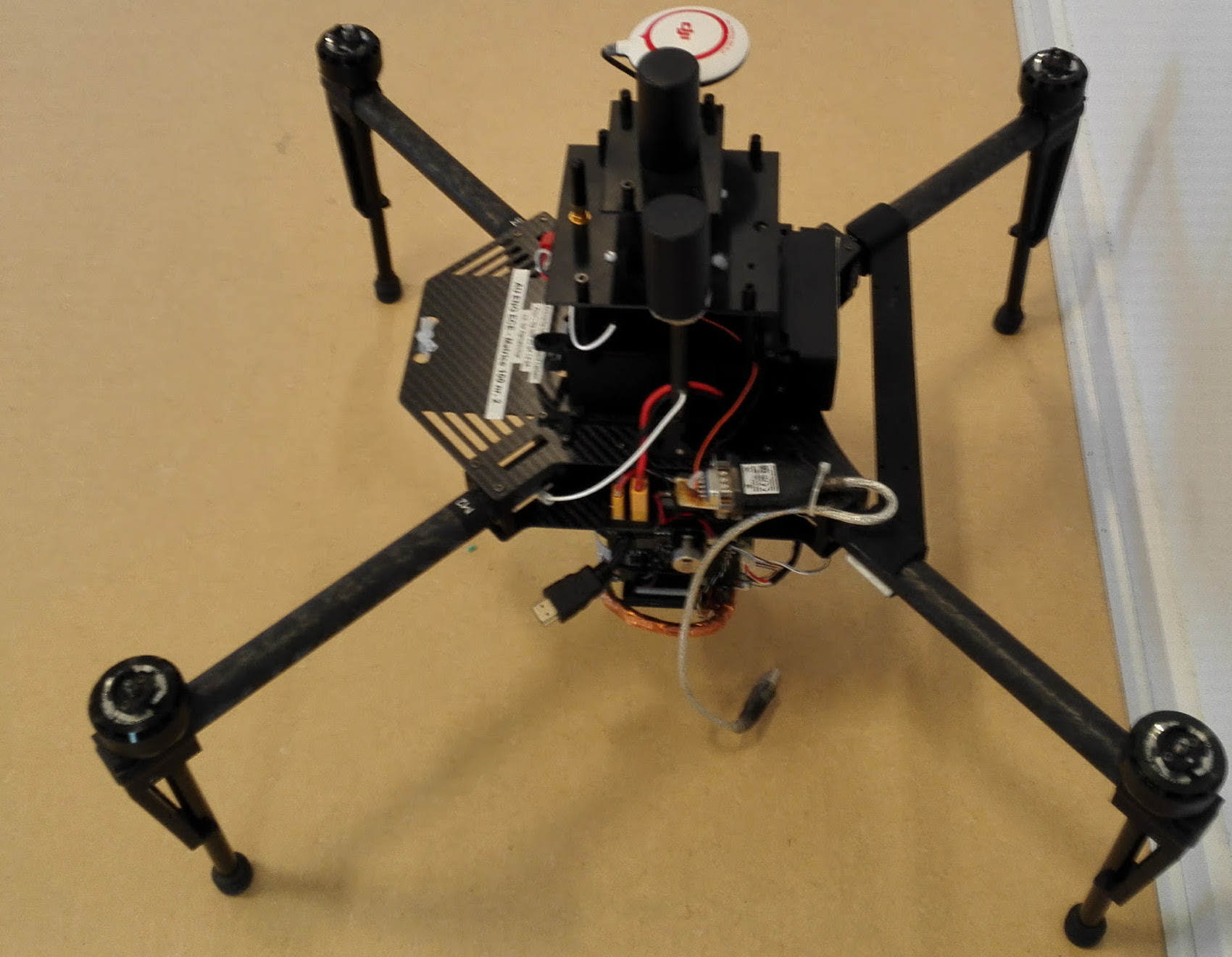

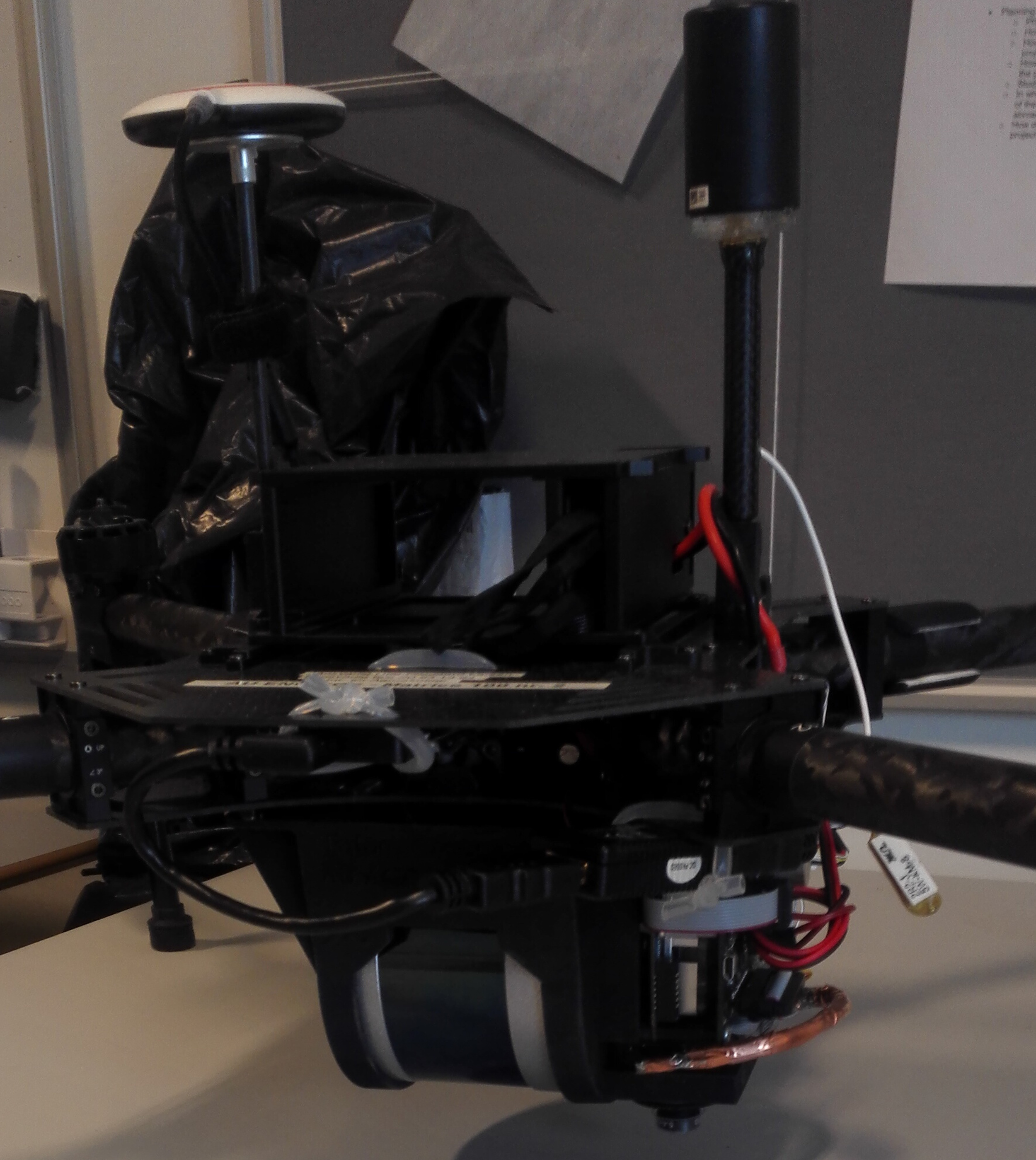

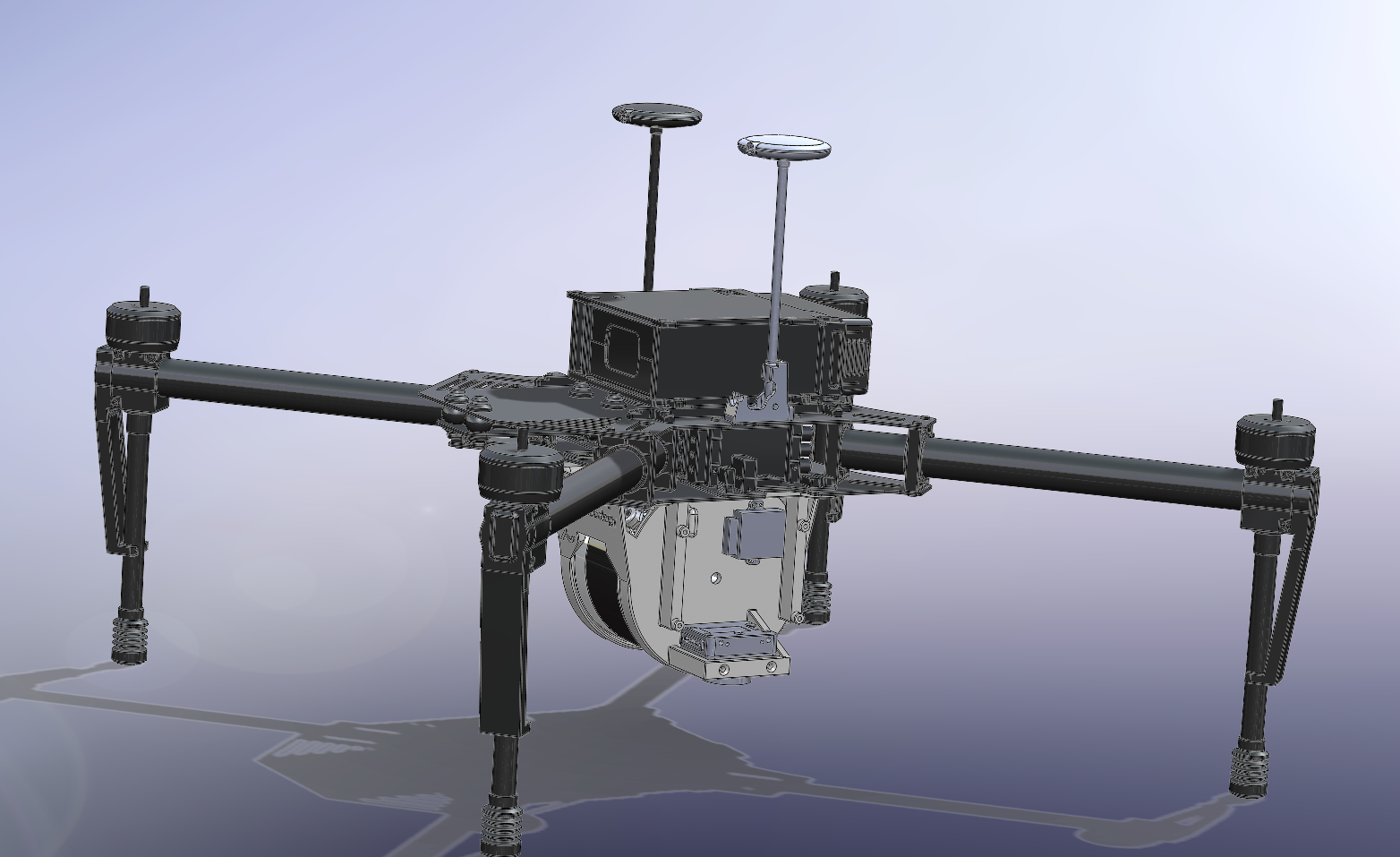

In this project, we attempt to use LIDAR data recorded via UAV to map and estimate the volume of crops in an experimental field. Our multi sensor was specifically designed for mapping agriculture field area’s, by for allowing for simultaneous recording of LIDAR and RGB spatial sensory data at low altitudes. A DJI Matrice 100 unmanned aerial vehicle (UAV) with firmware v. 1.3.1.10 and a TB48D battery pack was used for the project.

The purpose of the performed experiment was to create 3D LIDAR point-clouds of the field enabling canopy volume and textural analysis discriminating different crop treatments. We evaluate the mapped point-clouds for their accuracy in estimating the structure of the crop parcels. The estimated crop parcels structure is a significant factor since we intend to use it as an alternative method to determine the biomass of the crops. We expect that crop height and biomass accumulation will be visible in the LIDAR point clouds.

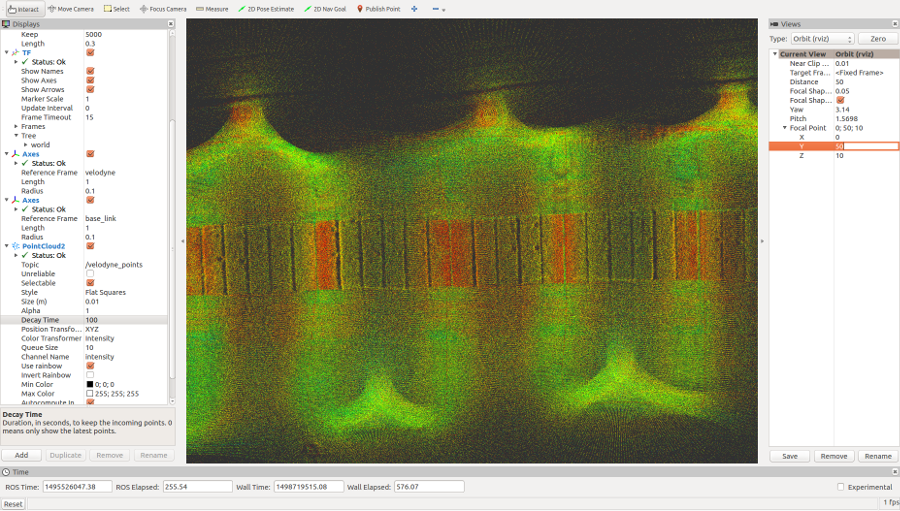

The video below was one of our first mapping attempts with our platform.

The accuracy has since become significantly better:

Hardware setup

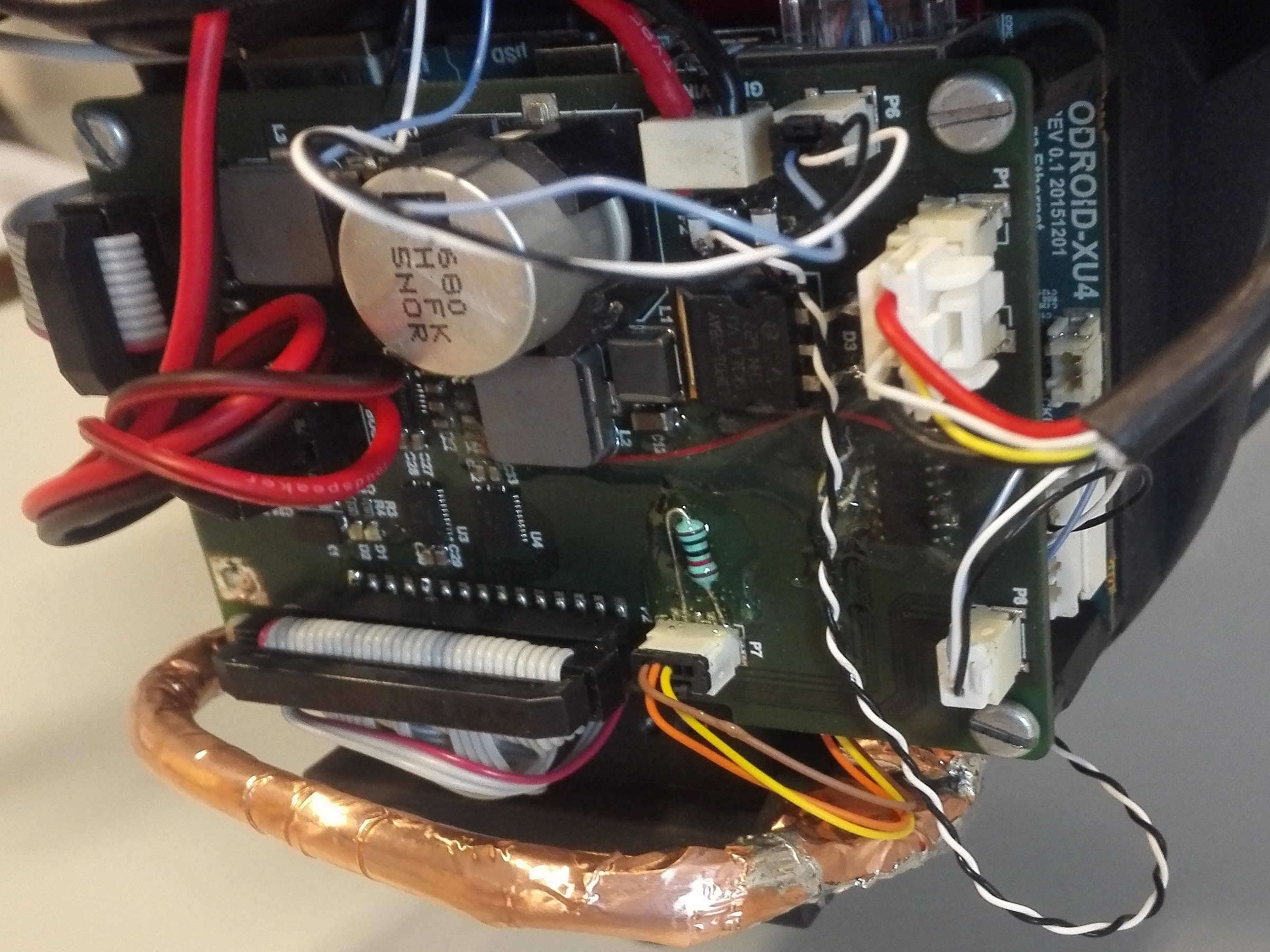

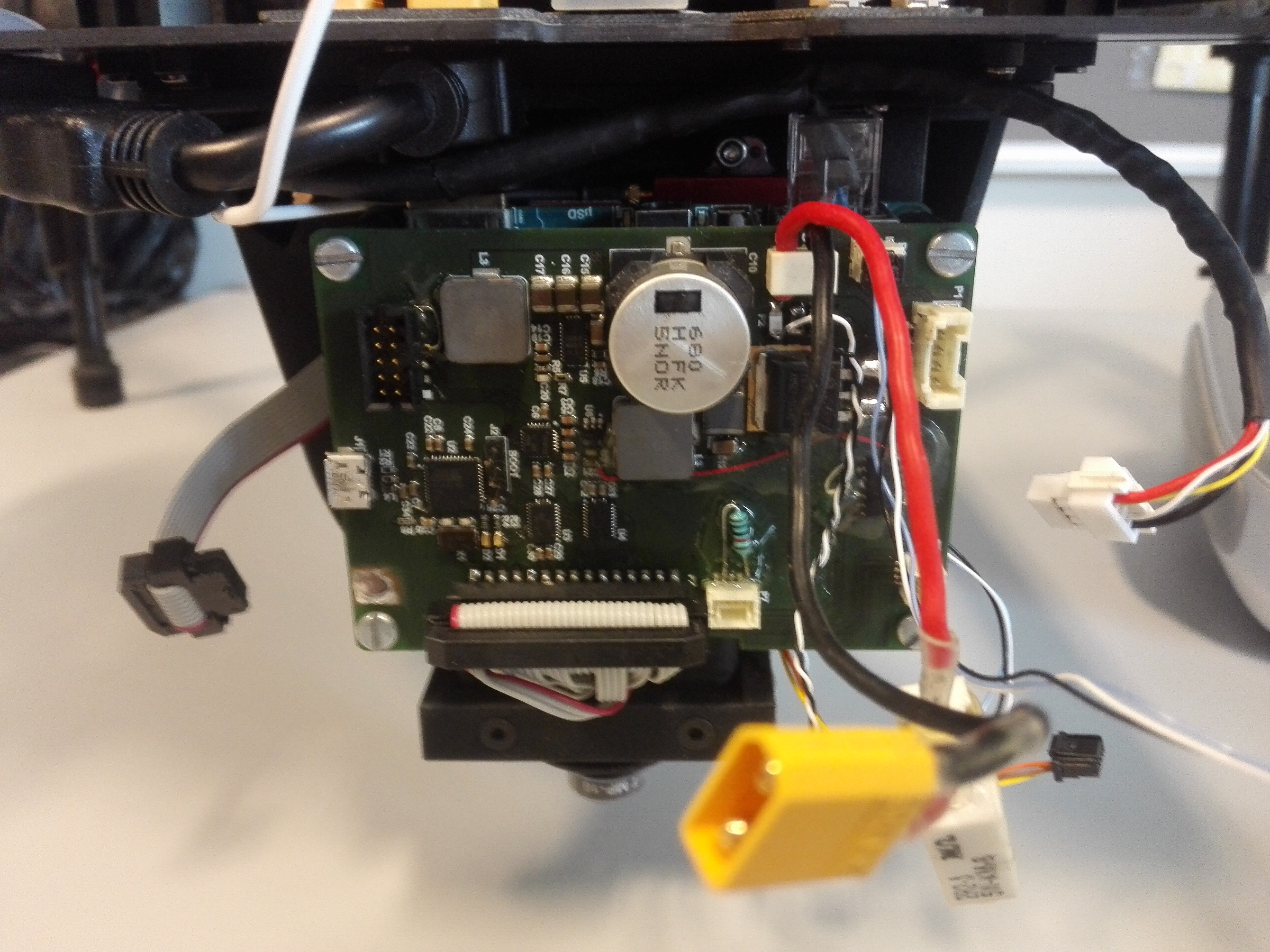

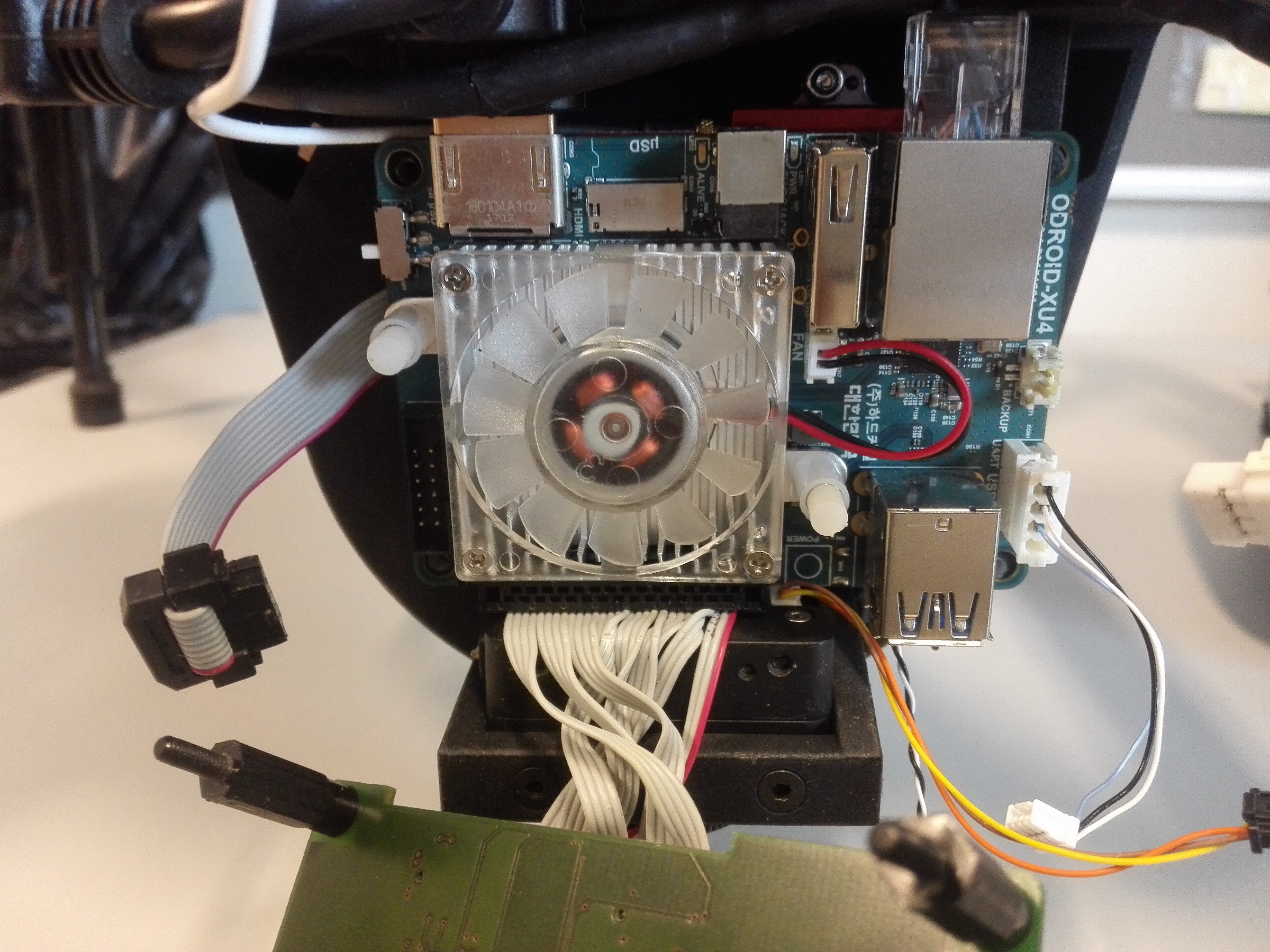

We use an Odroid XU4 with an in-house build extension board controlling IO and power for datalogging.

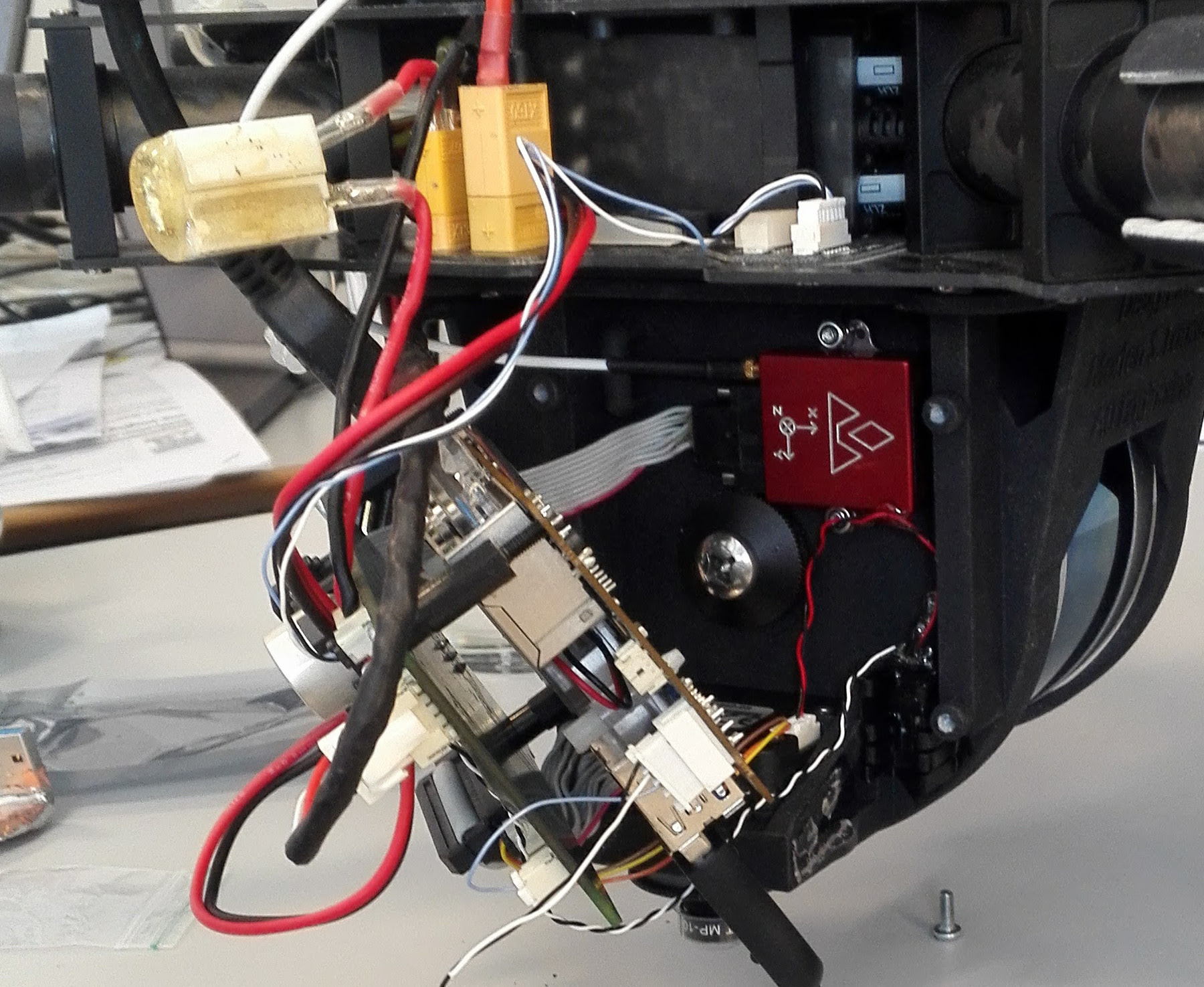

The sensor system consist of a Velodyne VLP-16 LIDAR that is connected via ethernet, a Point Grey Charmeleon3 3.2MP color camera with a Sony imx265 sensor connected via USB3, a Vectornav VN-200 with a MAXTENA M1227HCT-A2-SMA antenna connected via RS-232, a Trimble BD920 RTK-GNSS module connected via a USB-serial (RS-232) and a RS-232 serial connection to the DJI drone. Both camera and LIDAR is facing downwards since observations on the ground are the focus of this experiment.

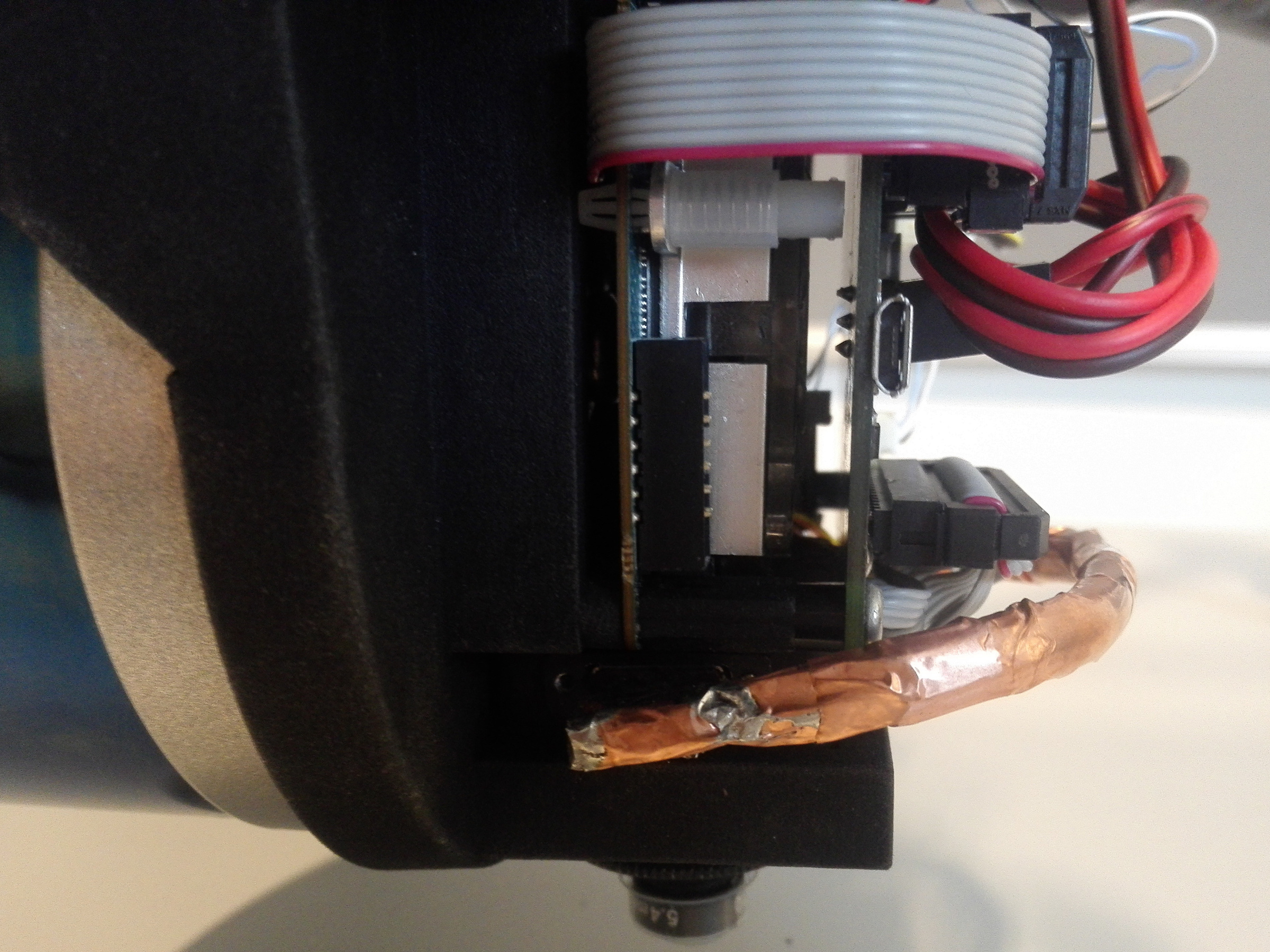

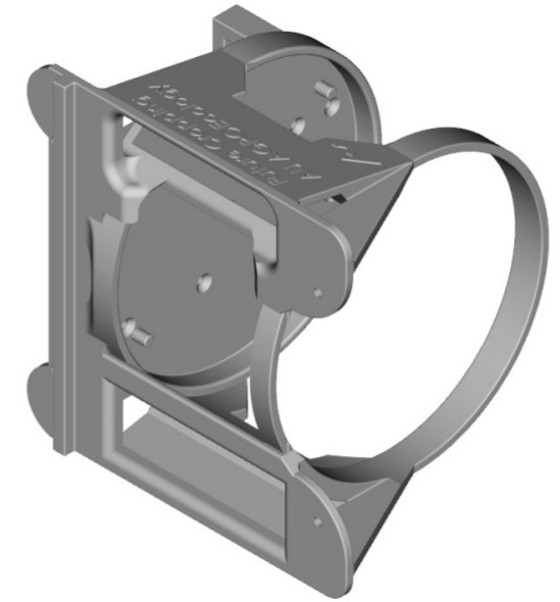

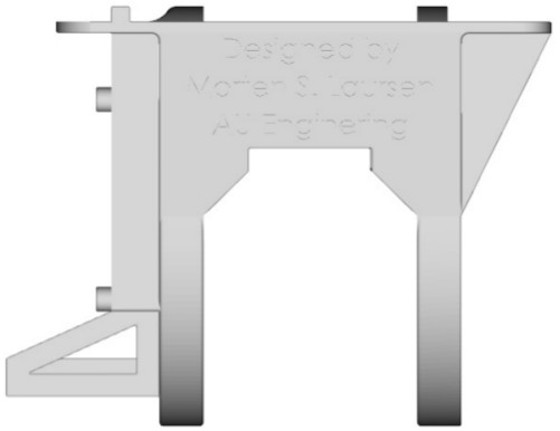

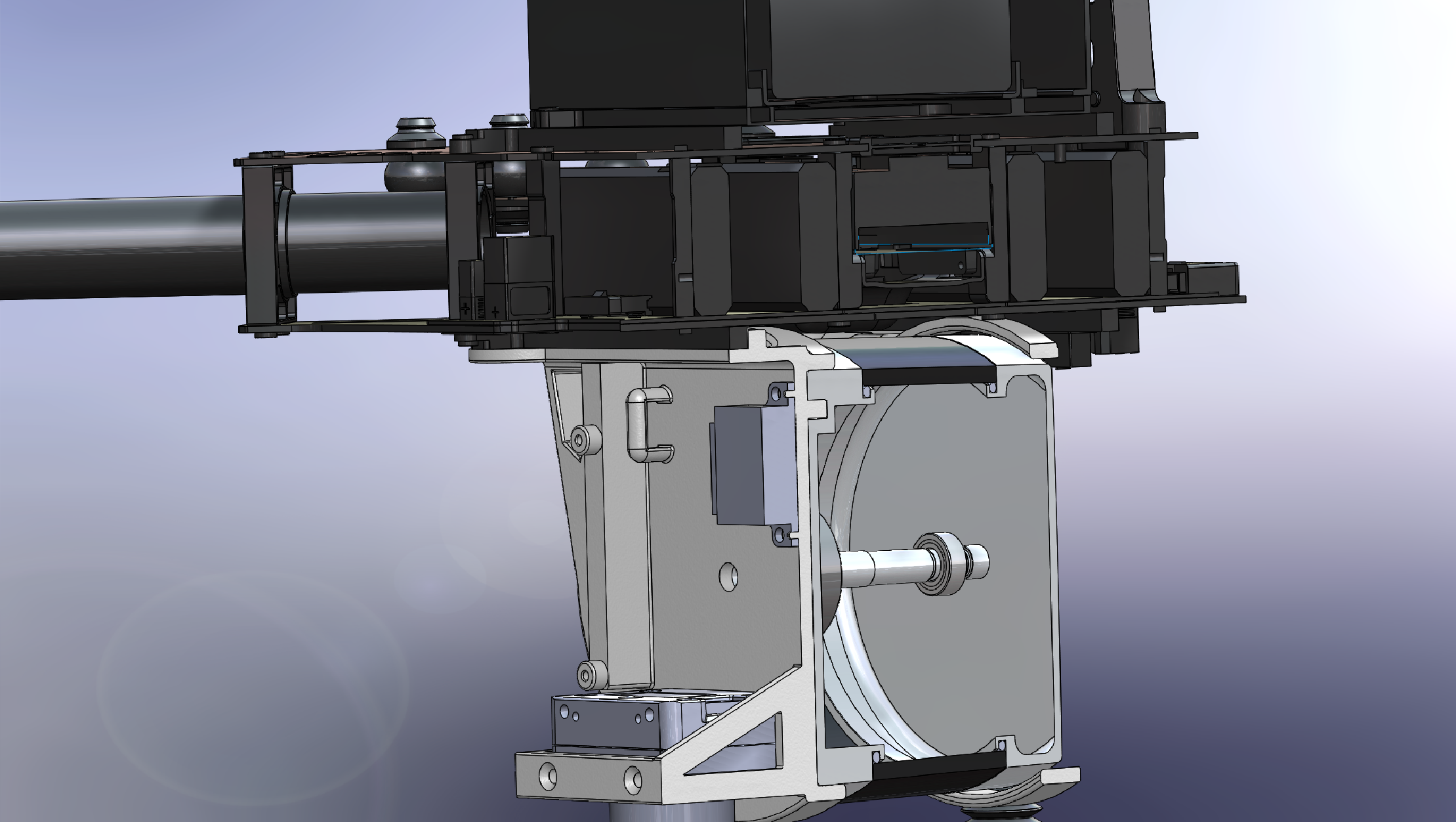

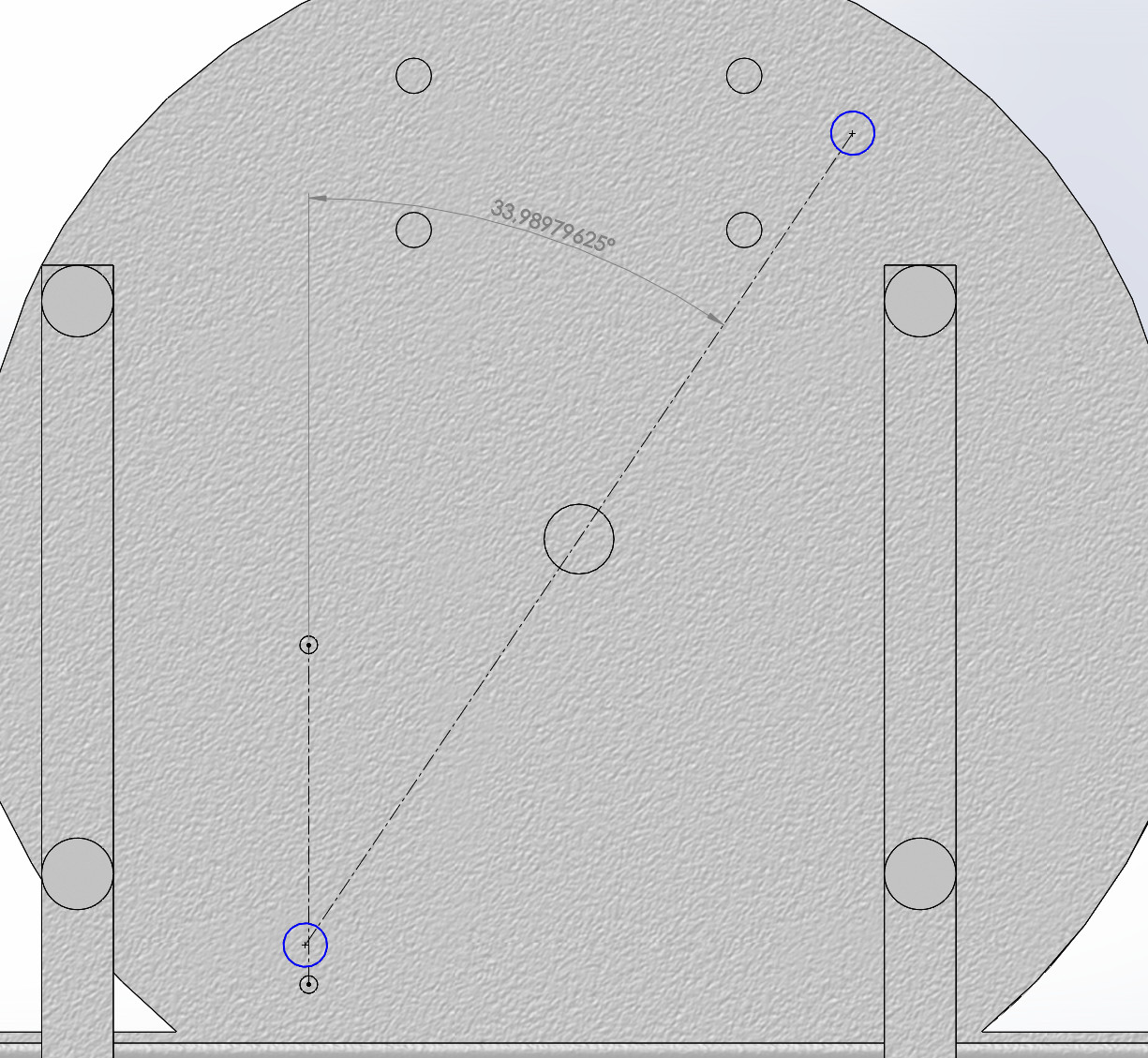

3D sensor mount

A sensor mount printed in 3D nylon has been designed to fit as the payload.

3D diagram and CAD model of the sensor mount, can be found in the links below:

SolidWorks part

Adobe 3D PDF of the part

STL CAD for printing

PDF of the STL CAD

3D SVG model

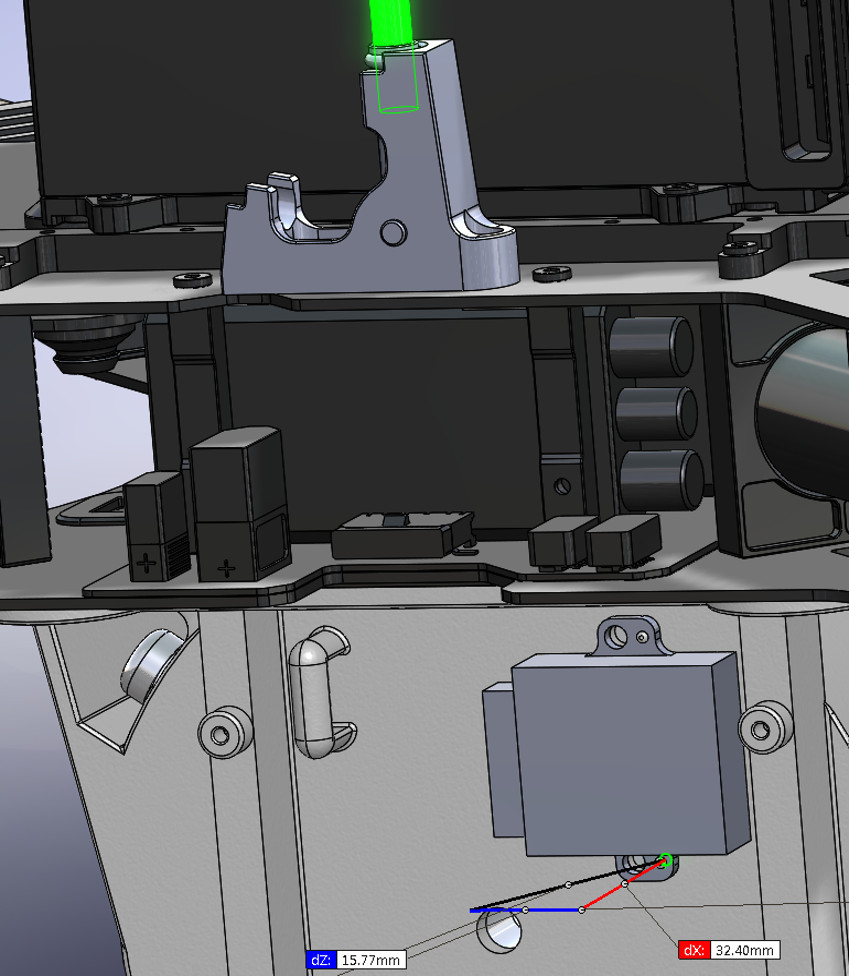

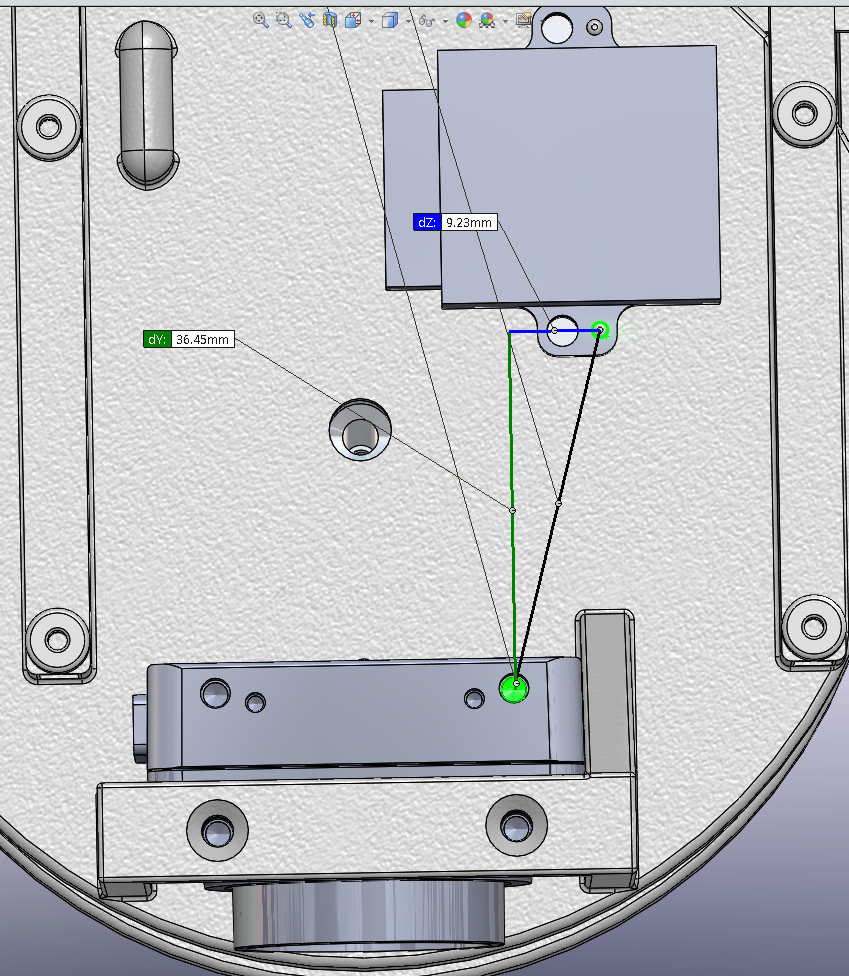

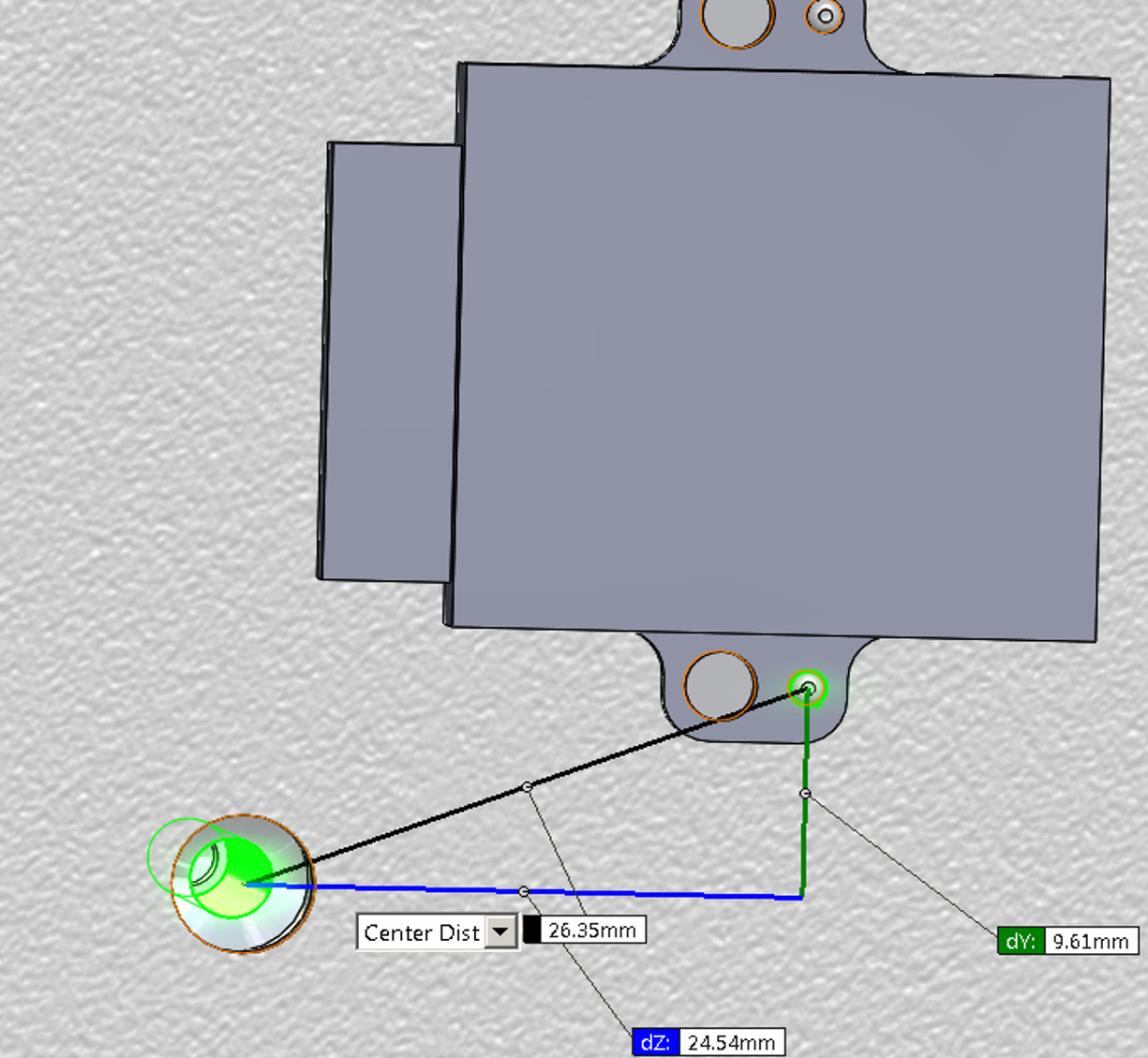

The VectorNav IMU and PointGrey Camera is mounted as illustrated, in the following image sequence:

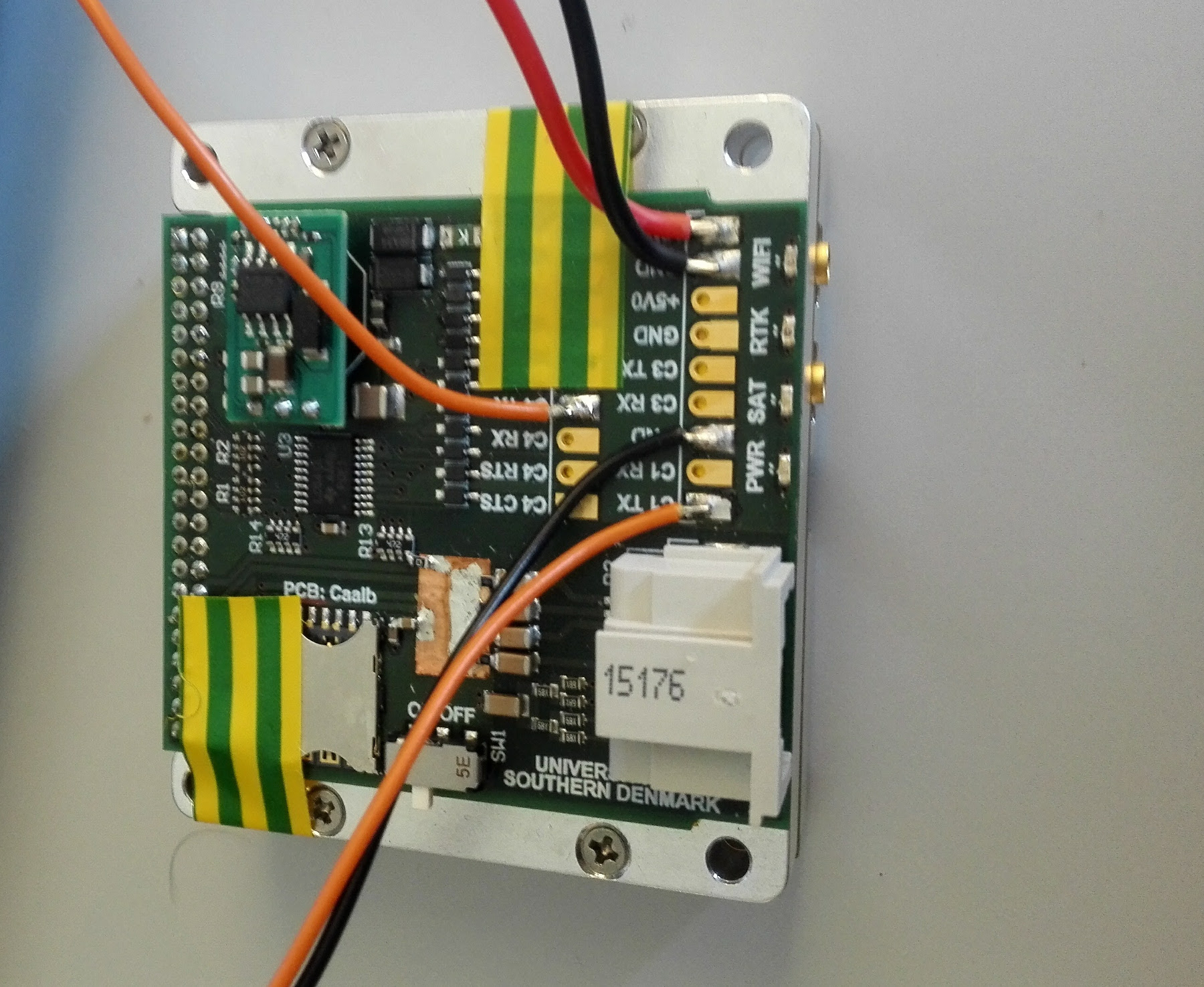

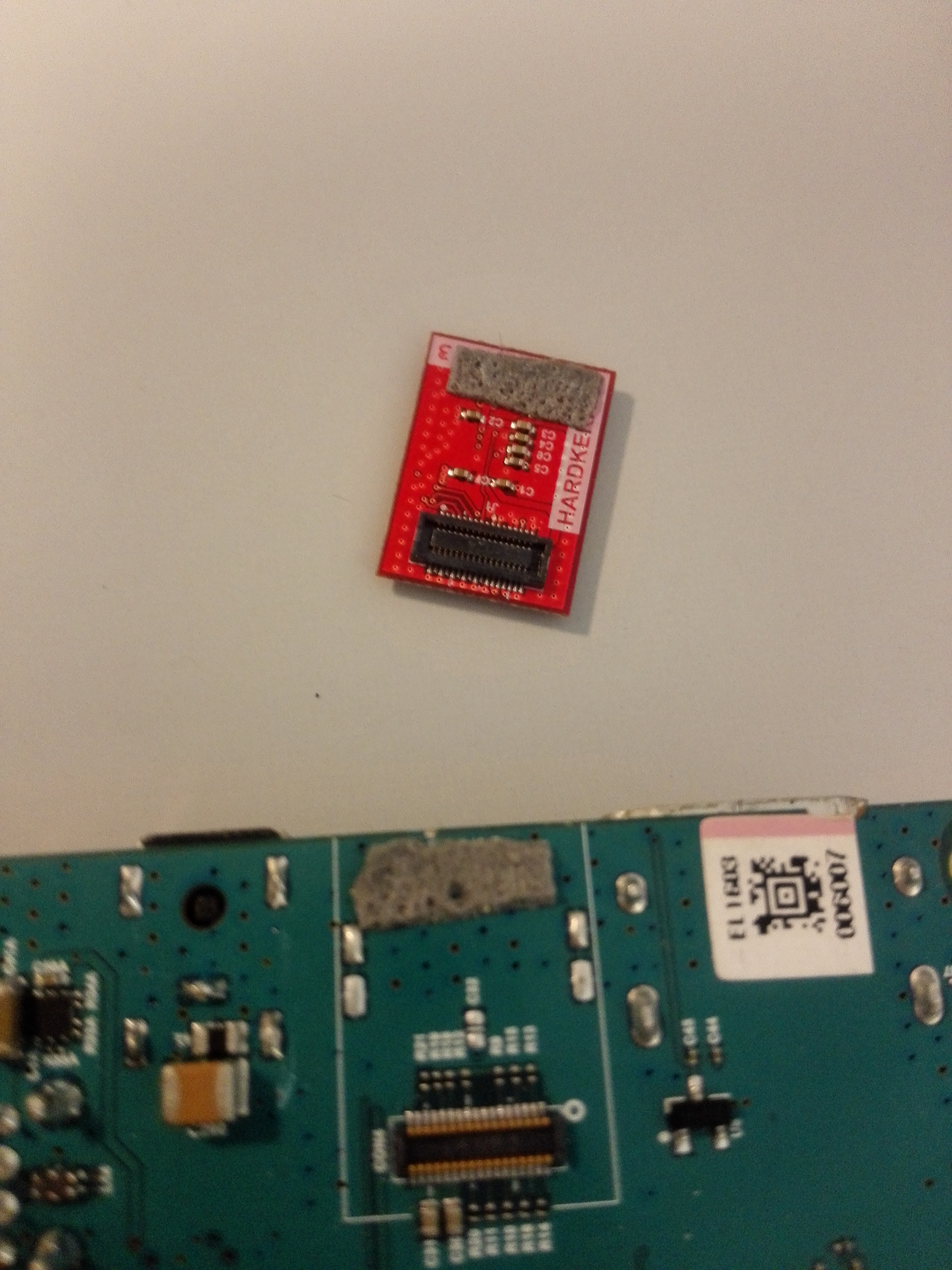

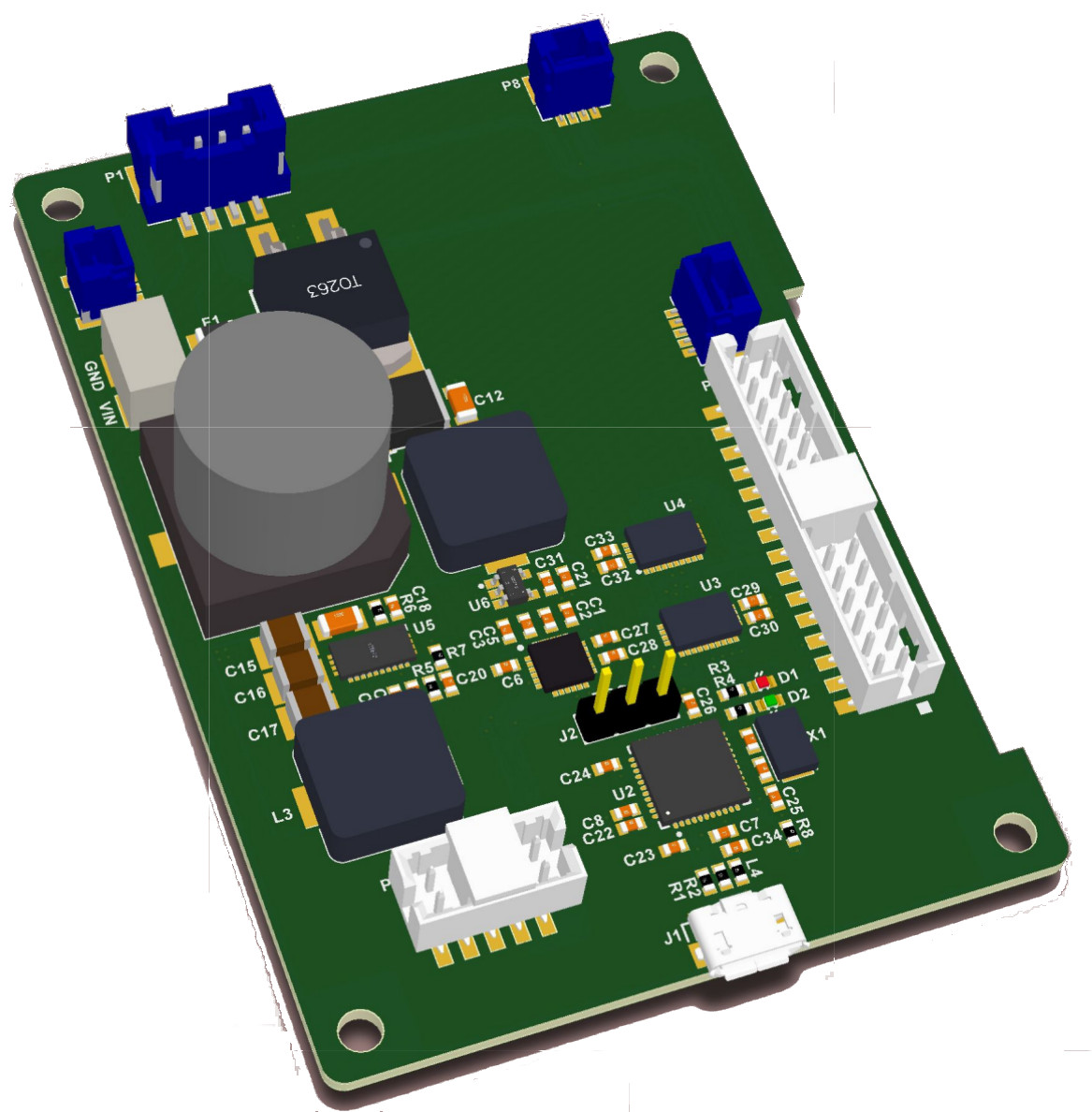

Extension board

We have updated the extension board schematic with the changes discovered in the current release:

Extension board schematic as PDF

Extension board Diagram in KiCAD

Bill of materials

This is a list of the major components, sub-components in the system, besides the extension board. The includes the prices at acquisition and payload weight contribution on the UAV. What is missing from the lsit is connectors and cables for wiring the system.

| Component | sub-component | Name | Part number | weight (gram) | Price (USD) (2016-05-31) |

|---|---|---|---|---|---|

| UAV | DJI Matrice 100 | Matrice 100 | 3299 | ||

| Camera | 564 | ||||

| Camera | Machine vision camera | Point Grey Chameleon3-32 | CM3-U3-31S4C-CS | 54.9 | 465 |

| Camera | Lense | 5.4mm f/2.5 60d HFOV 10MP | GP54025 | 6.3 | 99 |

| LiDAR | Velodyne VLP-16 | 80-VLP-16 | 830 | 7999 | |

| IMU/GNSS | 3422 | ||||

| IMU/GNSS | IMU | Vectornav VN-200 Rugged | VN-200 | 16 | 3200 |

| IMU/GNSS | GNSS antenna | Maxtena Antenna Unit | M1227HCT-A2-SMA |

23 | 222 |

| RTK-GNSS | 8460 | ||||

| RTK-GNSS | GNSS unit | Trimble BD920 GNSS | BD920 |

24 | 8238 |

| RTK-GNSS | GNSS antenna | Maxtena Antenna Unit | M1227HCT-A2-SMA |

23 | 222 |

| Computer | 146 | ||||

| Computer | main computer board | ODROID-XU4 | ODROID-XU4 |

60 | 59 |

| Computer | storage | 64GB eMMC Module | XU4 eMMC Module |

1 | 63 |

| Computer | usb-serial | Mitsubishi USB serial | UC232A USB-RS232 |

? | 24 |

Software setup

UAV recording setup

To record sensory data, we used the Robot Operating System (ROS) running on top of Ubuntu 14.04 armhf with ROS release indigo. The ubuntu-armhf image with ROS nodes and software libraries, can be downloaded here:

Ubuntu-armhf image for the UAV

For the individual ROS nodes, they can be checked out using the following commands:

git clone git@bitbucket.org:auengagroactu_sens/velodyne_vlp16.gitgit clone git@bitbucket.org:auengagroactu_sens/vectornav.gitgit clone git@bitbucket.org:auengagroactu_sens/dji_matrice100_onboard_sdk_ros.gitgit clone git@bitbucket.org:auengagroactu_sens/pointgrey_camera_driver.git

Local development

For local development on your laptop we recommend using Ubuntu 16.04 and ROS kinetic:

Ubuntu install of ROS Kinetic

Currently we use the

sudo apt-get install ros-kinetic-desktop-full

If you use Ubuntu, you need to install the following to compile the code:

sudo apt-get install libpcl-dev libpcl1.7 libpcl1-dev python-pcl-msgssudo apt-get install libusb-1.0-0 libusb-dev libusb-1.0-0-devsudo apt-get install libexif-dev python-opencvsudo apt-get install ros-kinetic-cv-bridge ros-kinetic-cv-camera

To send the new source to target, we recommend using rsync via SSH.

ATmega32U4 code

The code for the ATmega32U4 code can be checked out here:

git clone git@bitbucket.org:auengagroactu_sens/atmega_uav_lidar_board.gitThe pulse-per-second (PPS) signal from the VN-200 is used for sampling synchronization. The PPS signal is routed to the Velodyne VLP16 as an external time source. The Point Grey camera is triggered using a 10Hz signal (10x PPS), phase-locked to the PPS using a hardware timer in the ATmega32U4.

To compile the code on Ubuntu, you need the following packages installed:

sudo apt-get install avrdude gcc-avr binutils-avr gdb-avr avr-libcIf you want to program the ATmega32U4 via SPI, you should use this project instead of the normal avrdude:

kcuzner-avrdude with a Linux SPI programmer type

Example sensory data sets

We provided 3 example recorded data from the experimental field to the public.

All dataset are recorded as rosbags using the odroid platform.

To experiment with the data you need a similar software setup.

The built-in node rosbag in ROS was used to record data and timestamp for all active ROS nodes:

| Sensor output | Sample-rate | Notes |

|---|---|---|

| DJI ROS sdk | 50Hz | (DJI OS time, attitude Quaternion), Baud=230400 |

| VectorNav IMU (1) | 50Hz | (Gyro, Acceleration, Quaternion, TimeGps), Baud=115200 |

| VectorNav IMU (2) | 20Hz | (INS, TimeUTC, TimeGps, TimeSyncIn), Baud=115200 |

| VectorNav IMU (3) | 4Hz | (GPS, TimeUTC, TimeGps, Fix, sats), Baud=115200 |

| Velodyne Lidar | 10Hz | RPM=600, strongest return |

| Point Grey Camera | 10Hz | Resolution=2048×1536, 8 bits per pixel |

| Trimble GNSS (1) | 10Hz | GPGGA, Baud-rate=115200, usb-serial |

| Trimble GNSS (2) | 20Hz | GPRMC, Baud-rate=115200, usb-serial |

Recorded rosbag datasets

Example info printout from one of the rosbags about the topics:

topics: /camera/camera_info 501 msgs : sensor_msgs/CameraInfo

/camera/camera_nodelet/parameter_descriptions 1 msg : dynamic_reconfigure/ConfigDescription

/camera/camera_nodelet/parameter_updates 1 msg : dynamic_reconfigure/Config

/camera/camera_nodelet_manager/bond 102 msgs : bond/Status (2 connections)

/camera/image_raw 501 msgs : sensor_msgs/Image

/cloud_nodelet/parameter_descriptions 1 msg : dynamic_reconfigure/ConfigDescription

/cloud_nodelet/parameter_updates 1 msg : dynamic_reconfigure/Config

/diagnostics 99 msgs : diagnostic_msgs/DiagnosticArray (2 connections)

/dji_sdk/acceleration 2522 msgs : dji_sdk/Acceleration

/dji_sdk/activation 2521 msgs : std_msgs/UInt8

/dji_sdk/attitude_quaternion 2534 msgs : dji_sdk/AttitudeQuaternion

/dji_sdk/compass 51 msgs : dji_sdk/Compass

/dji_sdk/drone_task_action/status 254 msgs : actionlib_msgs/GoalStatusArray

/dji_sdk/flight_control_info 51 msgs : dji_sdk/FlightControlInfo

/dji_sdk/flight_status 509 msgs : std_msgs/UInt8

/dji_sdk/gimbal 2494 msgs : dji_sdk/Gimbal

/dji_sdk/global_position 2540 msgs : dji_sdk/GlobalPosition

/dji_sdk/global_position_navigation_action/status 253 msgs : actionlib_msgs/GoalStatusArray

/dji_sdk/local_position 2528 msgs : dji_sdk/LocalPosition

/dji_sdk/local_position_navigation_action/status 256 msgs : actionlib_msgs/GoalStatusArray

/dji_sdk/mission_event 2 msgs : dji_sdk/MissionPushInfo

/dji_sdk/odometry 2494 msgs : nav_msgs/Odometry

/dji_sdk/power_status 51 msgs : dji_sdk/PowerStatus

/dji_sdk/rc_channels 2515 msgs : dji_sdk/RCChannels

/dji_sdk/time_stamp 2502 msgs : dji_sdk/TimeStamp

/dji_sdk/velocity 2521 msgs : dji_sdk/Velocity

/dji_sdk/waypoint_navigation_action/status 257 msgs : actionlib_msgs/GoalStatusArray

/driver_nodelet/parameter_descriptions 1 msg : dynamic_reconfigure/ConfigDescription

/driver_nodelet/parameter_updates 1 msg : dynamic_reconfigure/Config

/fmData/rx 1527 msgs : msgs/serial

/rosout 59 msgs : rosgraph_msgs/Log (8 connections)

/rosout_agg 74 msgs : rosgraph_msgs/Log

/vectornav/binary_serial_data 3735 msgs : vectornav/serial_data

/vectornav/gps/data 202 msgs : sensor_msgs/NavSatFix

/vectornav/imu/data 2523 msgs : sensor_msgs/Imu

/vectornav/vn_gps 204 msgs : vectornav/gps

/vectornav/vn_imu 2530 msgs : vectornav/vectornav_imu

/vectornav/vn_ins 1015 msgs : vectornav/ins

/vectornav/vn_sync_in 504 msgs : vectornav/sync_in

/velodyne_nodelet_manager/bond 205 msgs : bond/Status (3 connections)

/velodyne_packets 506 msgs : velodyne_msgs/VelodyneScan

/velodyne_points 502 msgs : sensor_msgs/PointCloud2Winterwheat Path A, 2017-05-23-09-20

Example bag

All rosbags

Winterwheat Path B, 2017-05-23-09-49

Example bag

All rosbags

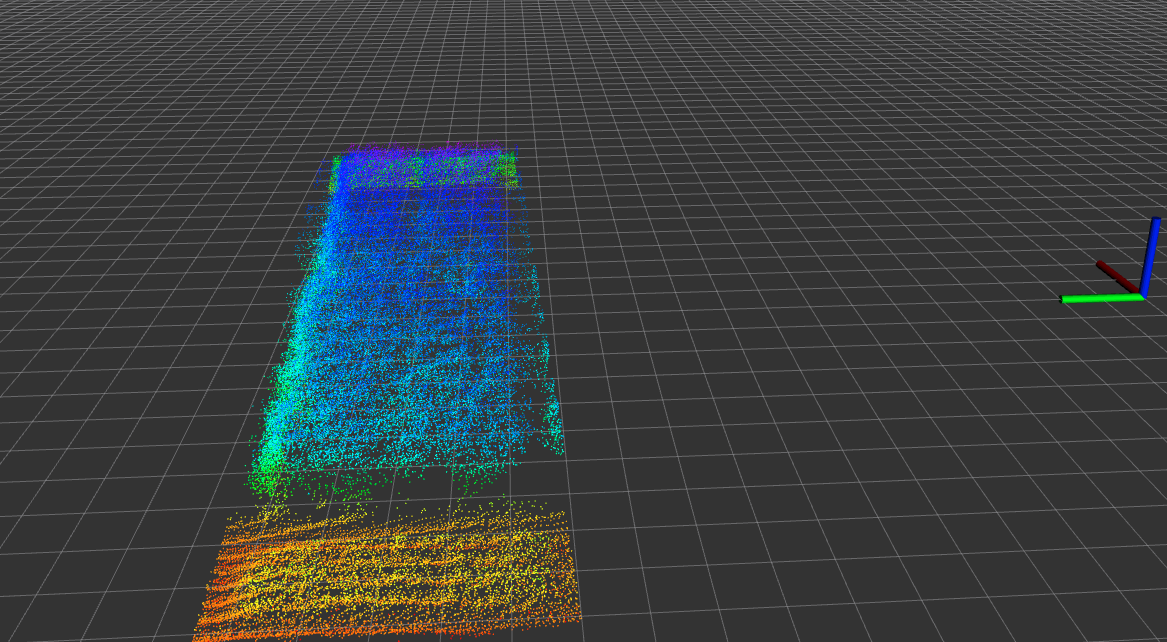

Example PCL point-clouds of crop parcels

We have included a number of parcel pointcloud examples,

to compare against your own results with the rosbags:

Example 1

Example 2

Example 3

Zip with 30 examples

Can also be open with the following online tool:

Lidar view online

Just remember to change to the format field to “X Y Z”.

Other reference material

Images from the experimental field:

Geotiff mosaic of the experimental field, after the data was recorded:

The reference points of the crop parcel corners and the nitrogen treatment type.

CSV with external Measurement from the field.

Note

All the information on this page is published in good faith and for general information purpose only. Our research group does not make any warranties about the completeness, reliability and accuracy of this information. Any action you take upon the information you find on this webpage, is strictly at your own risk.

Part of the following research projects

and