FieldSAFE – Dataset for Obstacle Detection in Agriculture

The FieldSAFE dataset is a novel multi-modal dataset for obstacle detection in agriculture. It comprises 2 hours of raw sensor data from a tractor-mounted sensor system in a grass mowing scenario in Denmark, October 2016.

Sensing modalities include stereo camera, thermal camera, web camera, 360-degree camera, lidar, and radar, while precise localization is available from fused IMU and GNSS. Both static and moving obstacles are present including humans, mannequin dolls, rocks, barrels, buildings, vehicles, and vegetation. All obstacles have ground truth object labels and geographic coordinates.

| Sensor | Model | Resolution | FOV / ° | Range / m | Datarate / fps |

|---|---|---|---|---|---|

| Stereo camera | Multisense S21 CMV2000 |

1024 x 544 | 85 x 50 | 1.5-50 | 10 |

| Web camera | Logitech HD Pro C920 | 1920 x 1080 | 70 x 43 | n/a | 20 |

| 360 camera | Giroptic 360cam | 2048 x 833 | 360 x 292 | n/a | 30 |

| Thermal camera | Flir A65, 13 mm lens | 640 x 512 | 45 x 37 | n/a | 30 |

| Lidar | Velodyne HDL-32E | 2172 x 32 | 360 x 40 | 1-100 | 10 |

| Radar | Delphi ESR | 16 targets/frame 16 targets/frame |

90 x 4.2 20 x 4.2 |

0-60 0-174 |

20 20 |

Usage

The entire dataset was recorded using the Robot Operating System (ROS) and is therefore contained in rosbags.

For detailed installation instructions and examples for how to use the dataset, see https://github.com/mikkelkh/FieldSAFE

In the ROS Details section below, ROS topics and data types for all sensors are listed along with a ROS transform tree.

Download

| Filename | Size / GB | Duration / mm:ss | Map | Description | |

|---|---|---|---|---|---|

|

static_map_rgb.png | 0.5 | n/a | Static RGB orthophoto of the field. | |

|

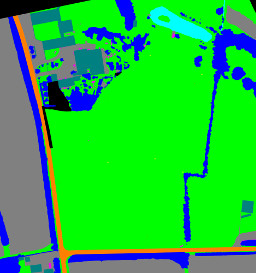

static_ground_truth.png | 0.002 | n/a | Static ground truth map the field. | |

|

2016-10-25-11-41-21_example.bag | 3.6 | 01:00 | 1 minute example bag. Extract from sequence with dynamic obstacles. | |

|

2016-10-25-11-09-42.bag | 73.9 | 17:25 |  |

Static obstacle session #1. 3 laps around the grass field with several static obstacle along the way. |

|

2016-10-25-11-34-25.bag | 26.6 | 06:00 |  |

Repositioning of the tractor. At the end, the setup is ready for recording dynamic obstacles. |

|

2016-10-25-11-41-21.bag | 91.3 | 21:54 |  |

Dynamic obstacle session #1. Humans move in random patterns, crossing the path of the tractor. |

|

2016-10-25-12-07-22.bag | 95.2 | 22:17 |  |

Dynamic obstacle session #2. Humans and a mini digger move in random patterns, crossing the path of the tractor. |

|

2016-10-25-12-37-57.bag | 112.2 | 26:05 |  |

Static obstacle session #2. The rest of the field is traversed and mowed, while passing a number of static obstacles. |

Ground Truth

Ground truth information on object location and class labels for both static and moving obstacles is available as timestamped global (geographic) coordinates.

By transforming local sensor data from the tractor into global coordinates, a simple look-up of class label into the annotated ground truth map is possible.

Static Obstacles

Orthophoto with tractor tracks overlaid. Black tracks include only static obstacles, whereas red and white tracks also have moving obstacles. Currently, red tracks have no ground truth for moving obstacles annotated. |

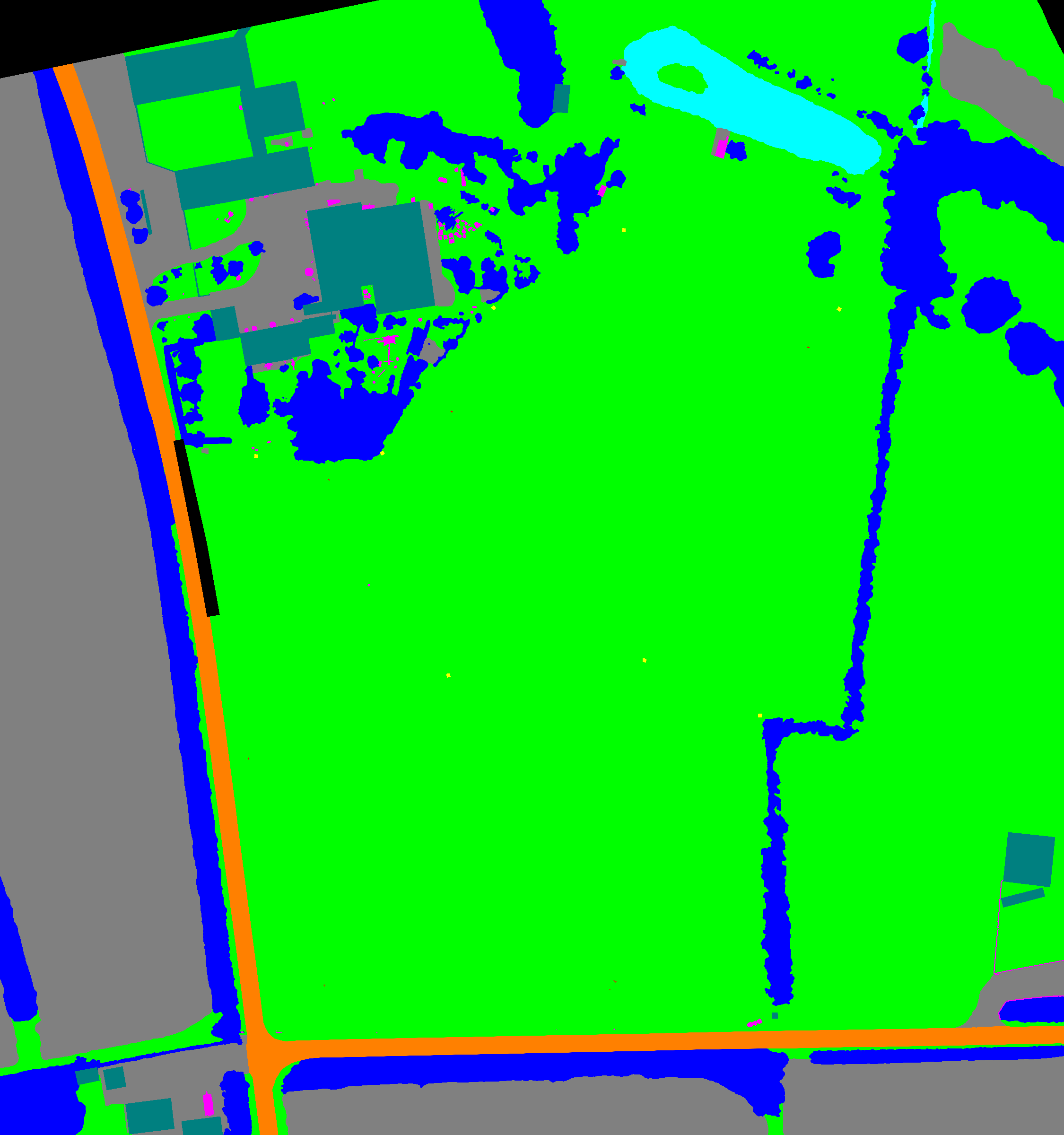

Labeled orthophoto. |

Dynamic Obstacles

Path of tractor and moving obstacles (humans) overlaid on the static ground truth map.

License

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International – Licens.

Citation

If you use this dataset in your research or elsewhere, please cite/reference the following paper:

FieldSAFE: Dataset for Obstacle Detection in Agriculture

@article{kragh2017fieldsafe,

AUTHOR = {Kragh, Mikkel Fly and Christiansen, Peter and Laursen, Morten Stigaard and Larsen, Morten and Steen, Kim Arild and Green, Ole and Karstoft, Henrik and Jørgensen, Rasmus Nyholm},

TITLE = {FieldSAFE: Dataset for Obstacle Detection in Agriculture},

JOURNAL = {Sensors},

VOLUME = {17},

YEAR = {2017},

NUMBER = {11},

ARTICLE NUMBER = {2579},

URL = {http://www.mdpi.com/1424-8220/17/11/2579},

ISSN = {1424-8220},

ABSTRACT = {In this paper, we present a multi-modal dataset for obstacle detection in agriculture. The dataset comprises approximately 2 h of raw sensor data from a tractor-mounted sensor system in a grass mowing scenario in Denmark, October 2016. Sensing modalities include stereo camera, thermal camera, web camera, 360 ∘ camera, LiDAR and radar, while precise localization is available from fused IMU and GNSS. Both static and moving obstacles are present, including humans, mannequin dolls, rocks, barrels, buildings, vehicles and vegetation. All obstacles have ground truth object labels and geographic coordinates.},

DOI = {10.3390/s17112579}

}

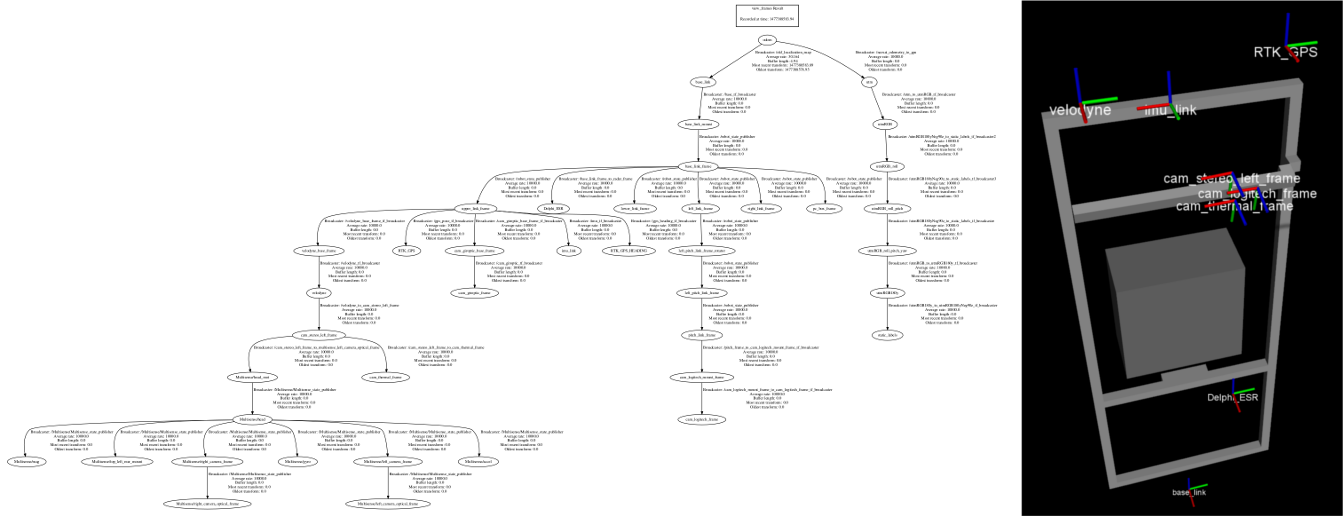

ROS Details

Below, ROS-specific details are given for which transforms and topics are available.

Extraction of the estimated extrinsic parameters for all sensors is described in the Transforms section.

Intrinsic camera parameters are described in the Camera Calibration section.

All available topics for each sensor are described in the Topics section.

More information and example code is available in the git repository.

Transforms

Below, a screenshot from rviz illustrates the sensor platform and sensor coordinate frames in ROS.

A full TF (transform) tree is available here.

To extract specific extrinsic parameters, the tf_echo package in ROS can be used.

Below, an example is given for finding translation and rotation between the lidar (velodyne) and the stereo camera (cam_stereo_left_frame).

The example requires the provided demo to be run in another terminal at the same time as described in the git repository.

rosrun tf tf_echo velodyne cam_stereo_left_frame

At time 0.000

- Translation: [0.012, 0.427, -0.295]

- Rotation: in Quaternion [0.564, -0.539, 0.428, -0.457]

in RPY (radian) [-1.788, 0.010, -1.517]

in RPY (degree) [-102.468, 0.590, -86.895]

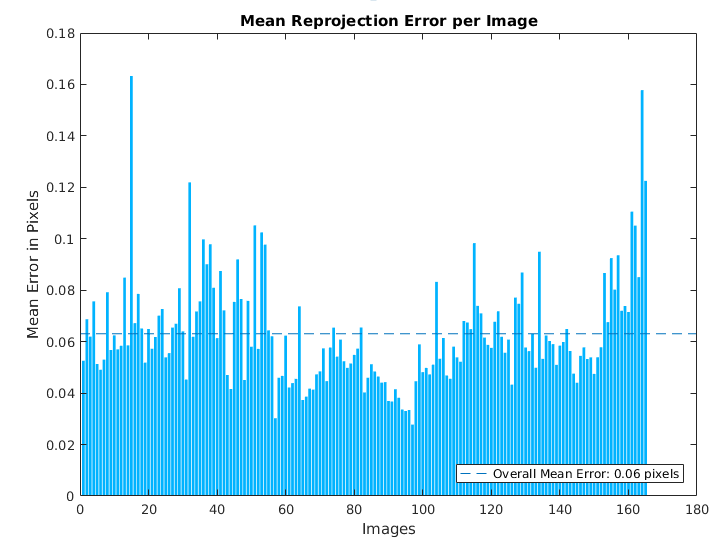

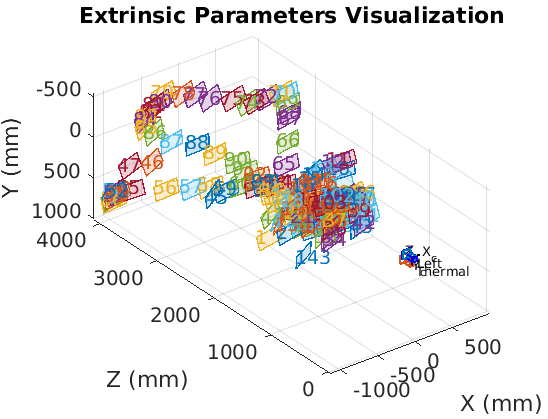

Camera Calibration

The stereo and thermal cameras have been registered and calibrated using the camera calibration method available in the Computer Vision System Toolbox in MATLAB.

The intrinsic camera parameters are available as MATLAB mat files for the stereo camera and the thermal camera.

Details for how to show reprojection errors and visualize extrinsic parameters are available from the MathWorks Documentation.

Topics

The recorded rosbags include a number of different topics for each sensor.

The table below lists all the topics and their message types in ROS.

| Sensor | Topics | Message type | Description |

|---|---|---|---|

| Stereo camera | /Multisense/depth | sensor_msgs/Image | Depth image |

| /Multisense/imu/accelerometer_vector | geometry_msgs/Vector3Stamped | Accelerometer data | |

| /Multisense/imu/gyroscope_vector | geometry_msgs/Vector3Stamped | Gyroscope data | |

| /Multisense/imu/imu_data | sensor_msgs/Imu | Packed accelerometer and gyroscope data | |

| /Multisense/imu/magnetometer_vector | geometry_msgs/Vector3Stamped | Magnetometer data | |

| /Multisense/left/camera_info | sensor_msgs/CameraInfo | Intrinsic calibration data (factory settings) | |

| /Multisense/left/image_rect_color | sensor_msgs/Image | Rectified left color image | |

| /Multisense/left/image_rect_color/camera_info | sensor_msgs/CameraInfo | Intrinsic calibration data (copy of above) | |

| /Multisense/pps | std_msgs/Time | Pulse per second signal | |

| /Multisense/right/camera_info | sensor_msgs/CameraInfo | Intrinsic calibration data (factory settings) | |

| /Multisense/right/image_rect | sensor_msgs/Image | Right grayscale image | |

| /Multisense/right/image_rect/camera_info | sensor_msgs/CameraInfo | Intrinsic calibration data (copy of above) | |

| /Multisense/stamped_pps | multisense_ros/StampedPps | Timestamped pulse per second signal | |

| /Multisense/status | multisense_ros/DeviceStatus | Device status message | |

| Web camera | /Logitech_webcam/camera_info | sensor_msgs/CameraInfo | Intrinsic calibration data |

| /Logitech_webcam/image_raw/compressed | sensor_msgs/CompressedImage | Compressed color image | |

| 360 camera | /giroptic_360/image/compressed | sensor_msgs/CompressedImage | Compressed color image |

| Thermal camera | /FlirA65/camera_info | sensor_msgs/CameraInfo | Intrinsic calibration data (do not use) |

| /FlirA65/image_raw | sensor_msgs/Image | Grayscale image | |

| Lidar | /velodyne_packets | velodyne_msgs/VelodyneScan | Raw laser data packets. Use provided software to generate 3D point clouds. |

| Radar | /fmData/can_rx_radar | msgs/can | Raw CAN messages |

| /Delphi_ESR/RadarData | htf_delphi_esr/Delphi_radar | Radar detections parsed from CAN messages | |

| GPS | /fmData/gps_rx | msgs/serial | Raw serial messages from GPS. Use provided software to generate sensor_msgs/NavSatFix messages. |

| IMU | /fmData/imu_rx | msgs/serial | Raw serial messages from IMU. Use provided software to generate sensor_msgs/Imu messages. |

| /fmData/imu_tx | msgs/serial | Raw serial message requests sent to the IMU. | |

| System | /diagnostics | diagnostic_msgs/DiagnosticArray | Diagnostics |

| /tf | tf/tfMessage | Outdated transform messages. Must be ignored when replaying bag. Use provided software to generate updated transforms. |

Note that intrinsic calibration data for cameras are included directly in ROS messages.

These values, however, are factory defaults and not the ones estimated during camera calibration.

For the calibrated values, see the Calibration section, above.

Below, an example is given for extracting the intrinsic parameters for the left image of the stereo camera.

The example requires the provided demo to be run in another terminal at the same time as described in the git repository.

rostopic echo /Multisense/left/camera_info

header:

seq: 21419

stamp:

secs: 1477388586

nsecs: 798077000

frame_id: /Multisense/left_camera_optical_frame

height: 544

width: 1024

distortion_model: plumb_bob

D: [0.0030753163155168295, 0.002497022273018956, 0.0003005412872880697, 0.001575434347614646, -0.003454494522884488, 0.0, 0.0, 0.0]

K: [555.9204711914062, 0.0, 498.1905517578125, 0.0, 556.6275634765625, 252.35089111328125, 0.0, 0.0, 1.0]

R: [0.9999634027481079, -0.000500216381624341, 0.00853759702295065, 0.0005011018947698176, 0.9999998807907104, -0.00010158627264900133, -0.00853754486888647, 0.00010586075950413942, 0.9999635219573975]

P: [580.6427001953125, 0.0, 512.0, 0.0, 0.0, 580.6427001953125, 254.5, 0.0, 0.0, 0.0, 1.0, 0.0]

binning_x: 0

binning_y: 0

roi:

x_offset: 0

y_offset: 0

height: 0

width: 0

do_rectify: False

---

Contact

For questions, contact Mikkel Fly Kragh at mkha@eng.au.dk